Usability Testing App

April 2025 (1-month project) • Solo Designer with 2 Developers

Summary

I designed the Mobile Test task type for Hubble’s usability testing platform, giving researchers a seamless way to capture authentic participant interactions on mobile apps and websites. This capability helped app-based companies gather reliable insights to refine their products and drive sales growth.

Challenge

Business limitation: Lack of mobile testing constrained Hubble’s customer reach and limited sales opportunities with mobile-first companies.

Complex flow with dual entry points: Participants could begin either on desktop or directly in the mobile app, which made it harder to guide them smoothly.

Unfamiliar testing process: Most participants had never done mobile usability testing before, so they needed clear instructions for setup, permissions, and task behaviors.

Risk of data loss or errors: Without careful guidance, recordings could fail to upload or link correctly, making results unreliable.

Outcome

By offering mobile usability testing, we expanded our customer pool to mobile-first users and strengthened sales conversations with a broader offering.

Designed a seamless experience that works reliably whether participants start on desktop or directly in the mobile app.

Provided clear, step-by-step guidance for setup, permissions, and test behaviors, reducing confusion and drop-off.

Ensured accurate recording, upload, and linkage to the right study, making mobile test results as trustworthy as desktop ones.

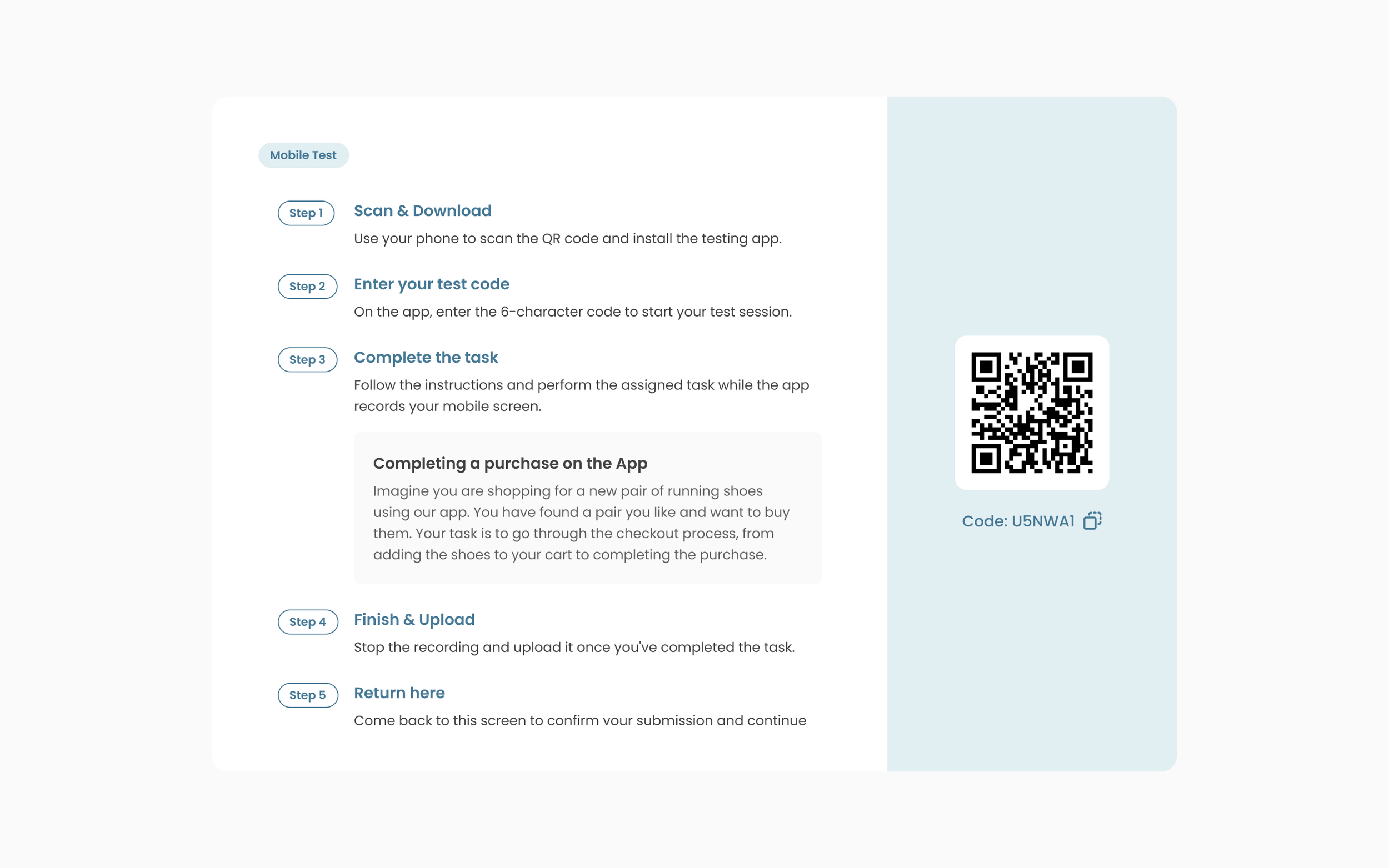

Getting Started (Desktop)

Many participants started from a desktop invite, so we needed a smooth handoff to mobile.

Participants scanned a QR code to download the app.

Outcome: Reduced typing errors and created a seamless transition from desktop to mobile setup.

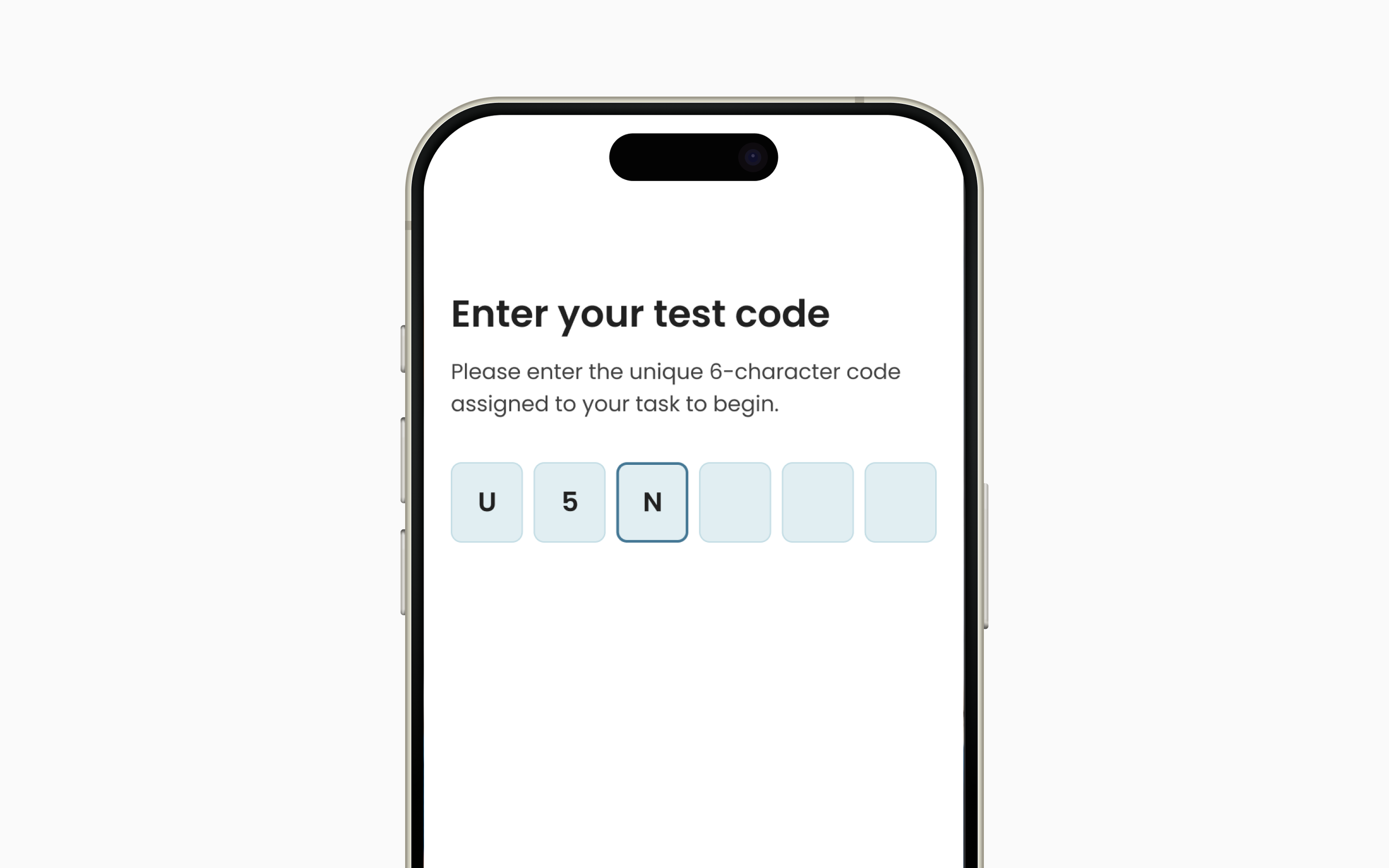

Getting Started (Mobile)

Some participants began entirely on their phone, so the flow had to be equally simple.

They tapped a download link and entered a unique 6-digit code.

Outcome: Kept onboarding lightweight (no account creation) while maintaining accurate session tracking.

Enter the Test Code

Linking participants to the correct study was critical for data accuracy.

A unique 6-digit code securely matched each participant to their study.

Outcome: Prevented misrouted sessions and simplified joining for first-time testers.

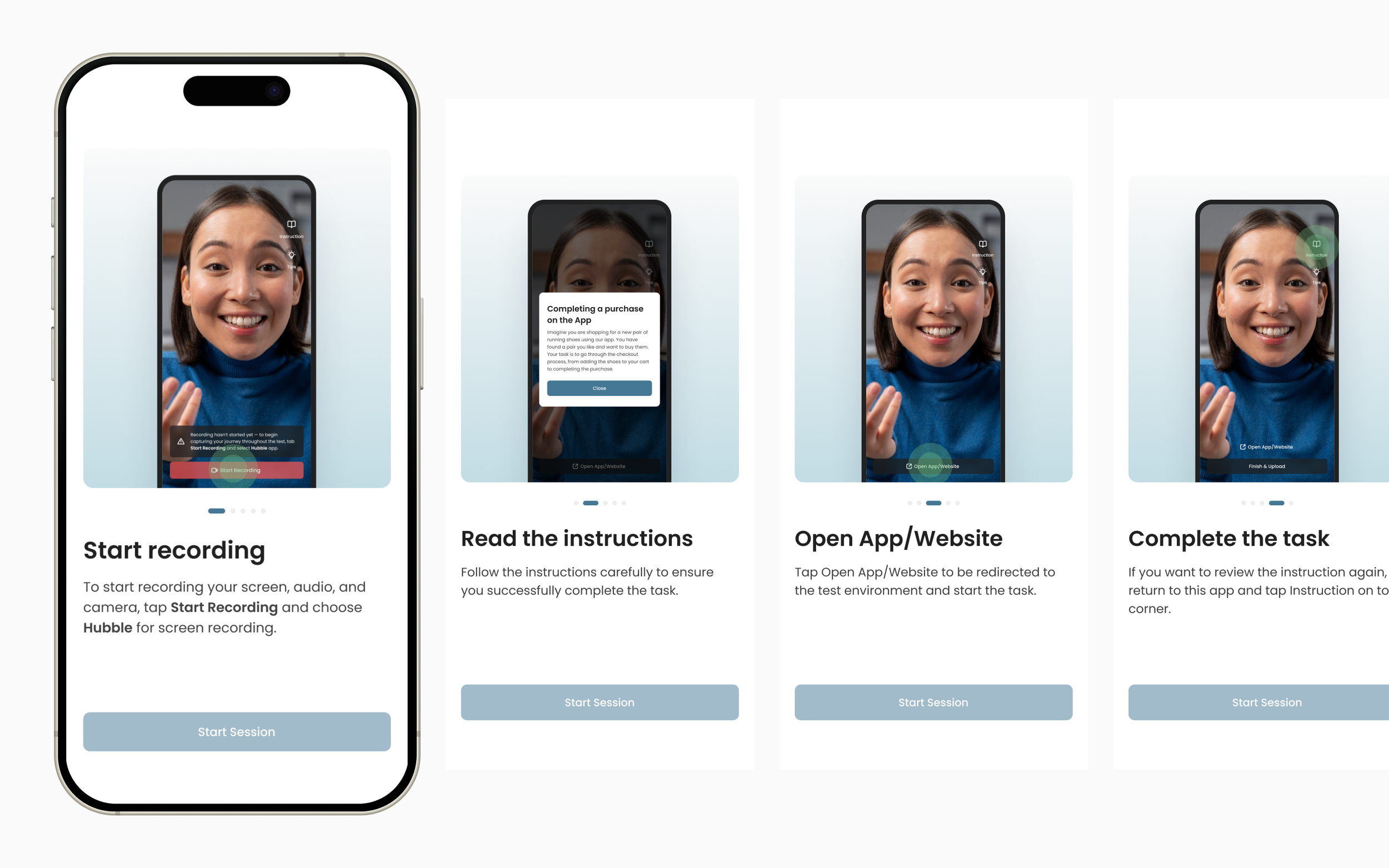

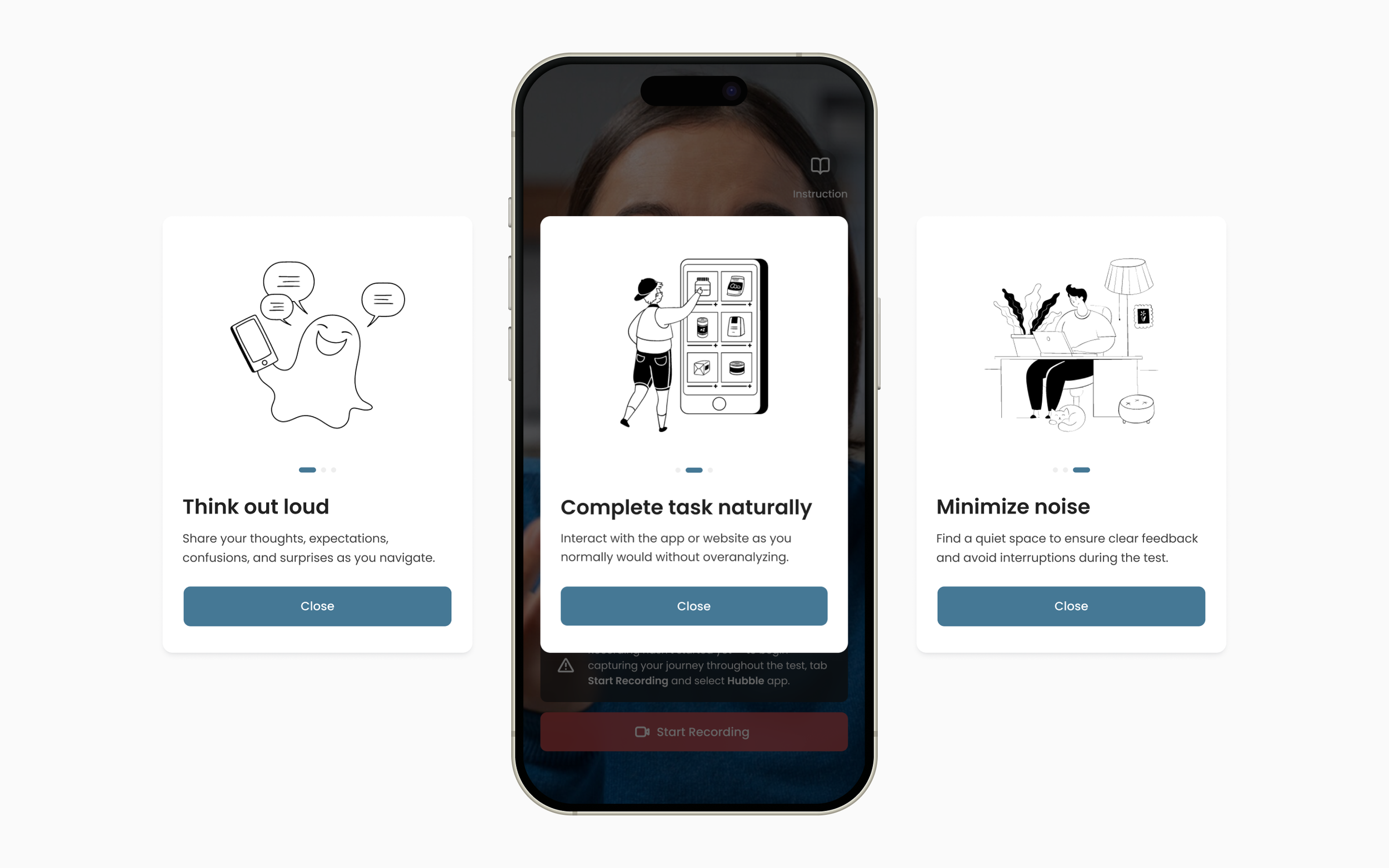

Instructions & Tips

Most participants had never done mobile usability testing before, so guidance was essential.

After entry, they received Instructions (what to do) and Tips (how to behave, e.g., think aloud, minimize distractions).

Outcome: Reduced confusion and improved feedback quality by setting clear expectations.

What to Do & How to Do It

Users often weren’t sure when recording had started, leading to mistakes.

We added a clear Start Recording button, a task-instructions icon that participants could tap to recheck the task whenever needed, and a notice clarifying that recording hadn’t started yet.

Outcome: Lowered error rates, avoided incomplete captures, and gave participants confidence to begin correctly.

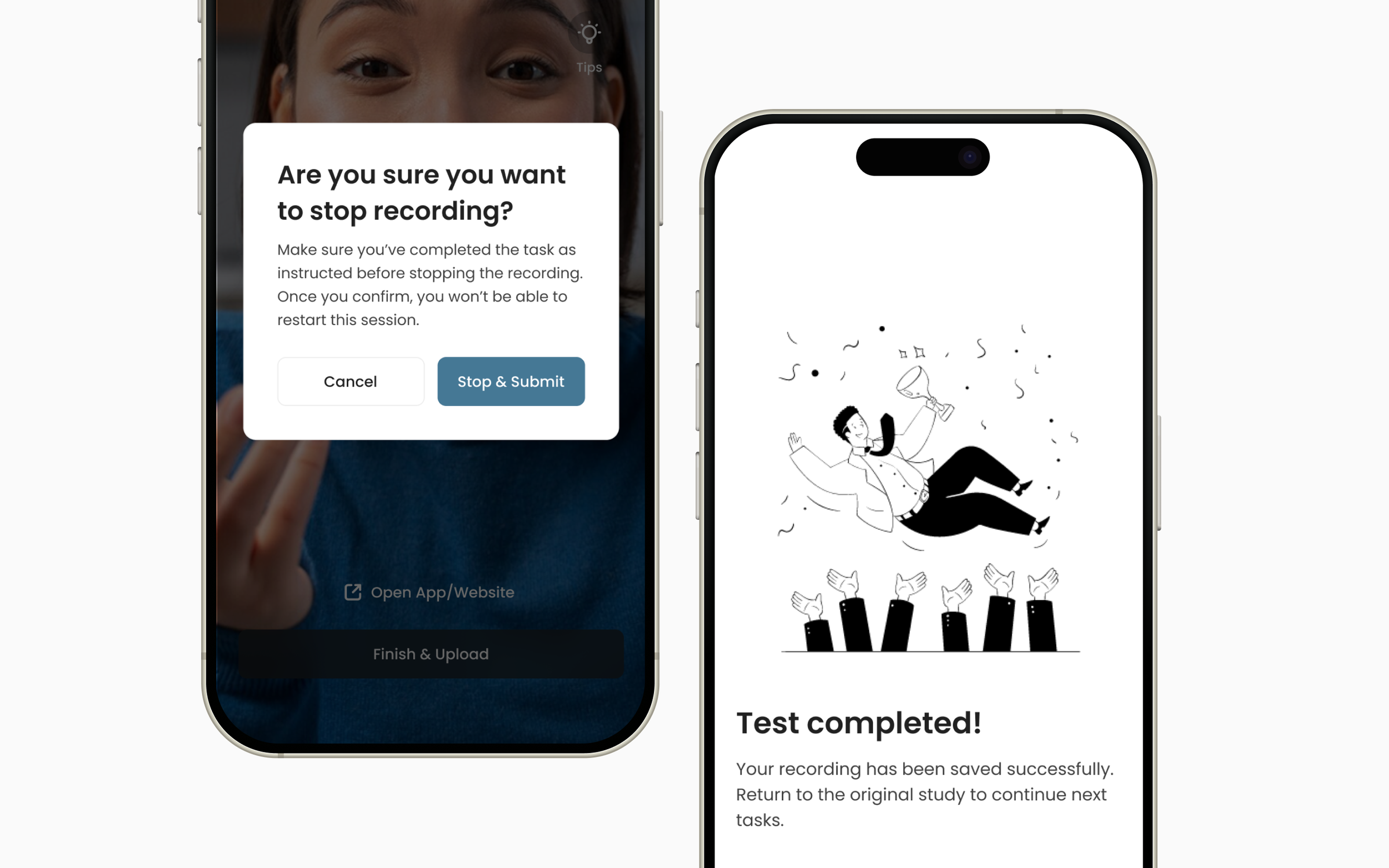

Complete the Test

The final step needed to be foolproof to avoid losing valuable data.

Participants tapped Open App/Website, then returned to finish recording; a confirmation modal and finished screen guided them back.

Outcome: Prevented lost sessions, ensured smooth uploads, and reconnected participants to the study platform without confusion.

ⓒ Jeany Lee - All rights reserved